When thinking about changes in phases of matter, the first images that come to mind might be ice melting or water boiling. The critical point in these processes is located at the boundary between the two phases – the transition from solid to liquid or from liquid to gas.

Phase transitions like these get right to the heart of how large material systems behave and are at the frontier of research in condensed matter physics for their ability to provide insights into emergent phenomena like magnetism and topological order. In classical systems, phase transitions are generally driven by thermal fluctuations and occur at finite temperature. By contrast, quantum systems can exhibit phase transitions even at zero temperature; the residual fluctuations that control such phase transitions are due to entanglement and are entirely quantum in origin.

Quantinuum researchers recently used the H1-1 quantum computer to computationally model a group of highly correlated quantum particles at a quantum critical point, on the border between a paramagnetic state (a state of magnetism characterized by a weak attraction) and a ferromagnetic one (characterized by a strong attraction).

Simulating such a transition on a classical computer is possible using tensor network methods, though it is difficult. However, generalizations of such physics to more complicated systems can pose serious problems to classical tensor network techniques, even when deployed on the most powerful supercomputers. On a quantum computer, on the other hand, such generalizations will likely only require modest increases in the number and quality of available qubits.

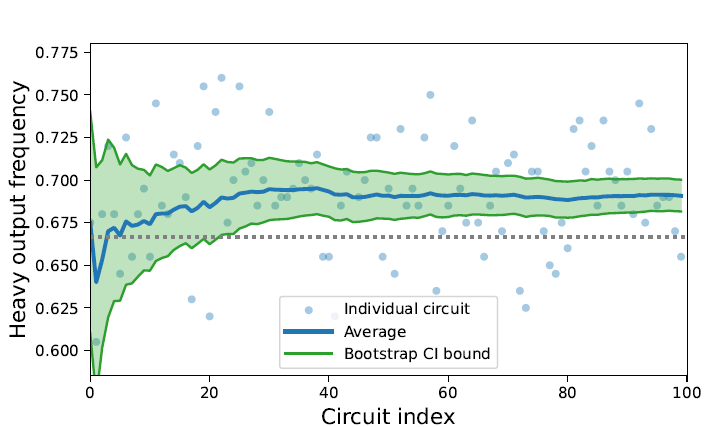

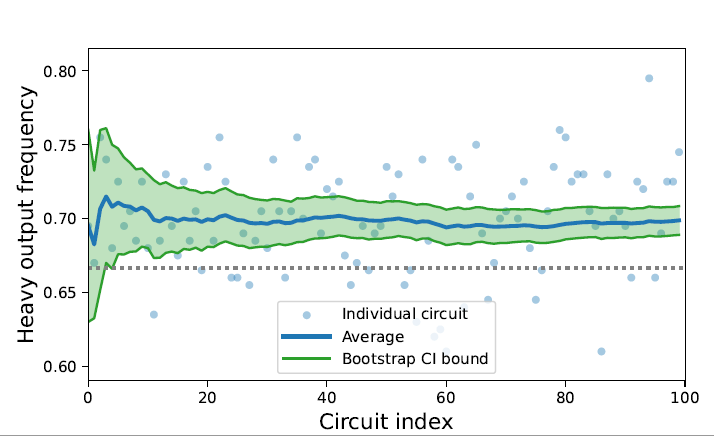

In a technical paper submitted to the arXiv, “Probing critical states of matter on a digital quantum computer,” the Quantinuum team demonstrated how the powerful components and high fidelity of the H-Series digital quantum computers could be harnessed to tackle a 128-site condensed matter physics problem, combining a quantum tensor network method with qubit reuse to make highly productive use of the 20-qubit H1-1 quantum computer.

Reza Haghshenas, Senior Advanced Physicist and lead author of the paper, said, “This is the kind of problem that appeals to condensed-matter physicists working with quantum computers, who are looking forward to revealing exotic aspects of strongly correlated systems that are still unknown to the classical realm. Digital quantum computers have the potential to become a versatile tool for working scientists, particularly in fields like condensed matter and particle physics, and may open entirely new directions in fundamental research.”

The role of quantum tensor networks

Tensor networks are mathematical frameworks whose structure enables them to represent and manipulate quantum states in an efficient manner. Originally associated with the mathematics of quantum mechanics, tensor network methods now crop up in many places, from machine learning to natural language processing, or indeed any model with a large number of interacting, high-dimensional mathematical objects.

The Quantinuum team described using a tensor network method, the multi-scale entanglement renormalization ansatz (MERA), to produce accurate estimates for the decay of ferromagnetic correlations and the ground state energy of the system. MERA is particularly well-suited to studying scale-invariant quantum states, such as ground states at continuous quantum phase transitions, because each layer of the network captures entanglement at a different length scale.

“By calculating the critical state properties with MERA on a digital quantum computer like the H-Series, we have shown that research teams can program the connectivity and system interactions into the problem,” said Dave Hayes, Lead of the U.S. quantum theory team at Quantinuum and one of the paper’s authors. “So, it can, in principle, go out and simulate any system that you can dream of.”

Simulating a highly entangled quantum spin model

In this experiment, the researchers wanted to accurately calculate the ground state of the quantum system in its critical state. This quantum system is composed of many tiny quantum magnets interacting with one another and pointing in different directions, known as a quantum spin model. In the paramagnetic phase, tiny, individual magnets in the material are randomly oriented, and only correlated with each other over small length-scales. In the ferromagnetic phase, these individual atomic magnetic moments align spontaneously over macroscopic length scales due to strong magnetic interactions.
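The difference between the two phases can be seen numerically in a very small example. The sketch below is illustrative only: it assumes the one-dimensional transverse-field Ising chain as a representative quantum spin model (the model is not named in the text above), and it uses classical brute-force diagonalization on 8 sites rather than MERA or a quantum computer. The function names, the chain length, and the field values are all choices made here for illustration.

```python
import numpy as np

# Single-site operators (Pauli X, Pauli Z, identity).
I2 = np.eye(2)
X = np.array([[0.0, 1.0], [1.0, 0.0]])
Z = np.array([[1.0, 0.0], [0.0, -1.0]])


def site_operator(op, site, n_sites):
    """Embed a single-site operator at position `site` of an n_sites chain."""
    result = np.array([[1.0]])
    for k in range(n_sites):
        result = np.kron(result, op if k == site else I2)
    return result


def ising_hamiltonian(n_sites, coupling=1.0, field=1.0):
    """Open chain: H = -coupling * sum Z_i Z_{i+1} - field * sum X_i."""
    dim = 2 ** n_sites
    h = np.zeros((dim, dim))
    for i in range(n_sites - 1):
        h -= coupling * site_operator(Z, i, n_sites) @ site_operator(Z, i + 1, n_sites)
    for i in range(n_sites):
        h -= field * site_operator(X, i, n_sites)
    return h


def zz_correlation(state, i, j, n_sites):
    """Expectation value <Z_i Z_j> in a real, normalized state vector."""
    op = site_operator(Z, i, n_sites) @ site_operator(Z, j, n_sites)
    return float(state @ op @ state)


n = 8
end_to_end = {}
for field in (0.2, 1.0, 3.0):  # weak field, field equal to coupling, strong field
    energies, states = np.linalg.eigh(ising_hamiltonian(n, field=field))
    ground = states[:, 0]  # eigh sorts eigenvalues in ascending order
    end_to_end[field] = zz_correlation(ground, 0, n - 1, n)
    print(f"field={field}: E0/site={energies[0] / n:.4f}, "
          f"<Z_0 Z_{n - 1}>={end_to_end[field]:.4f}")
```

In this toy model, a weak field leaves the two end spins strongly correlated (ferromagnetic behavior), a strong field leaves them nearly uncorrelated (paramagnetic behavior), and the case where field and coupling are equal sits in between. The matrix dimension doubles with every added site, which is why brute force of this kind cannot reach the system sizes discussed in the article.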

In the computational model, the quantum magnets were initially arranged in one dimension, along a line. To describe the critical point in this quantum magnetism problem, particles in the line needed to be entangled with one another in a complex way, making this a very challenging problem for a classical computer to solve in high dimensional and non-equilibrium systems.

“That's as hard as it gets for these systems,” Dave explained. “So that's where we want to look for quantum advantage – because we want the problem to be as hard as possible on the classical computer, and then have a quantum computer solve it.”

To improve the results, the team used two error mitigation techniques: symmetry-based error heralding, which is made possible by the MERA structure, and zero-noise extrapolation, a method originally developed by researchers at IBM. The first involved enforcing local symmetry in the model so that errors affecting the symmetry of the state could be detected. The second involves deliberately adding noise to the qubits to measure its impact, and then using those measurements to extrapolate to the results that would be expected under conditions with less noise than was present in the experiment.
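The extrapolation step of zero-noise extrapolation can be sketched in a few lines. This is a minimal illustration, not the team's implementation: it assumes a simple polynomial fit, and the noise scale factors and measured values below are made-up numbers chosen only to show the mechanics.

```python
import numpy as np


def zero_noise_extrapolate(noise_scales, expectation_values, degree=1):
    """Fit a polynomial to (noise scale, measured value) pairs and
    evaluate the fit at zero noise."""
    coeffs = np.polyfit(noise_scales, expectation_values, deg=degree)
    return float(np.polyval(coeffs, 0.0))


# Hypothetical data: scale 1.0 is the unmodified circuit, while 2.0 and 3.0
# are runs in which the noise was deliberately amplified.
scales = [1.0, 2.0, 3.0]
values = [0.80, 0.65, 0.50]

estimate = zero_noise_extrapolate(scales, values)
print(f"zero-noise estimate: {estimate:.3f}")
```

The choice of fit (linear here) is itself an assumption about how the measured quantity degrades with noise; in practice the functional form has to be justified for the hardware and circuit in question.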

Future applications

The Quantinuum team describes this sort of problem as a stepping-stone, which allows the researchers to explore quantum tensor network methods on today’s devices and compare them either to simulations or analytical results produced using classical computers. It is a chance to learn how to tackle a problem really well before quantum computers scale up in the future and begin to offer solutions that are not possible to achieve on classical computers.

“Potentially, our biggest applications over the next couple of years will include studying solid-state systems, physics systems, many-body systems, and modeling them,” said Jenni Strabley, Senior Director of Offering Management at Quantinuum.

The team now looks forward to future work, exploring more complex MERA generalizations to compute the states of 2D and 3D many-body and condensed matter systems on a digital quantum computer – quantum states that are much more difficult to calculate classically.

The H-Series allows researchers to simulate a much broader range of systems than analog devices as well as to incorporate quantum error mitigation strategies, as demonstrated in the experiment. Plus, Quantinuum’s System Model H2 quantum computer, which was launched earlier this year, should scale this type of simulation beyond what is possible using classical computers.